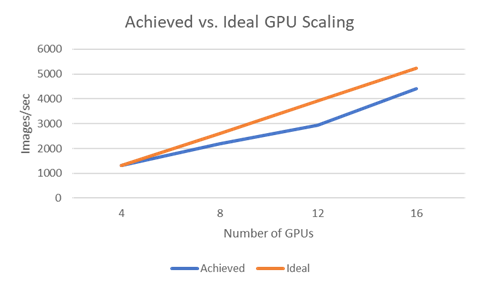

MONAI v0.3 brings GPU acceleration through Auto Mixed Precision (AMP), Distributed Data Parallelism (DDP), and new network architectures | by MONAI Medical Open Network for AI | PyTorch | Medium

Announcing the NVIDIA NVTabular Open Beta with Multi-GPU Support and New Data Loaders | NVIDIA Technical Blog